Whenever you put your game out into the wild, you can never be quite sure what you’re going to learn from it.

The Jetsam v0.2 Beta was no exception.

The Beta Data

This time around, we were prepared to gather hard numbers through an in-app logging call that dumped a row of delicious data into a Google Sheets spreadsheet every time a player successfully completed a level, and every time they failed one.
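A logging call like that might record something along the lines of the Python sketch below. The field names and row layout are hypothetical and are not Jetsam's actual schema. The `sink` parameter stands in for whatever really appends the row to the spreadsheet, for example a client library such as gspread's `Worksheet.append_row`. Here it is just an in-memory list so the example runs on its own.

```python
from dataclasses import astuple, dataclass
from datetime import datetime, timezone
from typing import Callable, List


@dataclass(frozen=True)
class LevelEvent:
    """One spreadsheet row per level outcome (hypothetical layout)."""
    timestamp: str   # ISO-8601 UTC time of the event
    player_id: str   # anonymous per-install identifier (assumed)
    session_id: str  # identifies one play session (assumed)
    version: str     # build version, e.g. "v0.2.3"
    level: str       # level code, e.g. "W1-E"
    outcome: str     # "complete" or "fail"
    hints_used: int  # hints used on this attempt
    device: str      # device model name


def log_level_result(
    sink: Callable[[List[object]], None],
    *,
    player_id: str,
    session_id: str,
    version: str,
    level: str,
    completed: bool,
    hints_used: int,
    device: str,
) -> LevelEvent:
    """Build one row for a completed or failed level and hand it to `sink`."""
    event = LevelEvent(
        timestamp=datetime.now(timezone.utc).isoformat(),
        player_id=player_id,
        session_id=session_id,
        version=version,
        level=level,
        outcome="complete" if completed else "fail",
        hints_used=hints_used,
        device=device,
    )
    # The sink receives a flat list of cell values, one per column.
    sink(list(astuple(event)))
    return event


# Usage with an in-memory list standing in for the spreadsheet:
rows: List[List[object]] = []
log_level_result(rows.append, player_id="p1", session_id="s1", version="v0.2.3",
                 level="W1-E", completed=False, hints_used=0, device="iPhone X")
```

Logging failures as well as completions, as described above, is what makes the failure analysis later in this post possible.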

From this data, we were able to capture some interesting statistics. As of the time of writing, the Jetsam Level Editor Beta had:

- 48 Unique Players
- 219 Unique Levels Played
- 91 Hints Used
- 1573 Unique Play Sessions
- 4 distinct versions:
  - v0.2.0
  - v0.2.1
  - v0.2.2
  - v0.2.3

Identifying Difficulty Cliffs

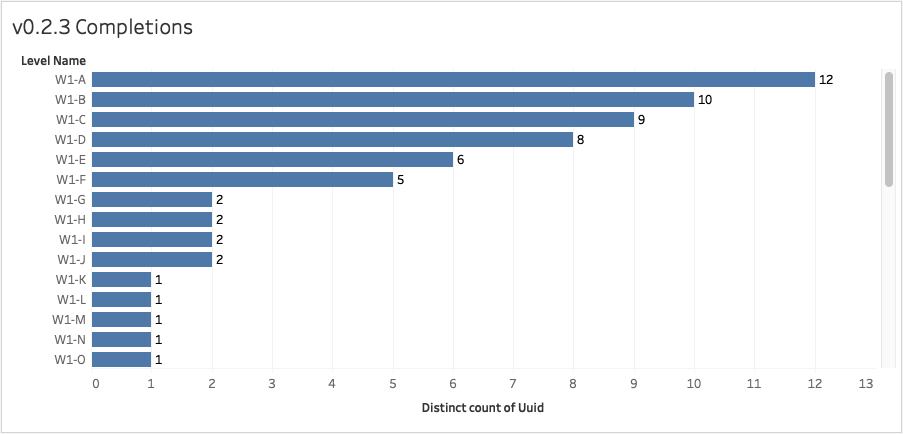

Given the linear structure of our game, we expected level completion numbers to start high at the beginning of each world and dwindle gradually as the levels got harder, but the actual completion numbers told a different story.

We had ourselves a cliff: completions dropped by over 33% from W1-D to W1-E. Were people getting bored with our game after four levels? Was the difficulty ramping up too quickly? We put these questions to our testers, and many of them echoed a common complaint: “it got too hard, too fast.”

We dug into failure data to see if that complaint held water – and lo and behold, we found that 11 of 17 unique W1-E players failed at least once when playing it!

W1-E: does it look difficult to you?
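The two measurements behind this cliff, the level-to-level dropoff in completions and the number of unique players who failed a level at least once, can be computed from logged rows roughly as follows. This is a minimal Python sketch under stated assumptions: the function names are hypothetical, completion counts are assumed to be unique players per level, events are assumed to be `(player_id, level, outcome)` tuples, and the one-third cliff threshold is just an illustrative cutoff.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def level_dropoffs(completions: Dict[str, int], order: List[str]) -> List[Tuple[str, str, float]]:
    """Fractional drop in completions between each pair of consecutive levels."""
    result = []
    for prev, nxt in zip(order, order[1:]):
        before = completions.get(prev, 0)
        if before == 0:
            continue  # no baseline to compare against
        after = completions.get(nxt, 0)
        result.append((prev, nxt, (before - after) / before))
    return result


def failure_share(events: Iterable[Tuple[str, str, str]]) -> Dict[str, Tuple[int, int]]:
    """Map level -> (unique players who failed at least once, unique players)."""
    players: Dict[str, Set[str]] = defaultdict(set)
    failed: Dict[str, Set[str]] = defaultdict(set)
    for player_id, level, outcome in events:
        players[level].add(player_id)
        if outcome == "fail":
            failed[level].add(player_id)
    return {level: (len(failed[level]), len(ids)) for level, ids in players.items()}


# Made-up counts for illustration only, not the real beta figures:
completions = {"W1-A": 40, "W1-B": 38, "W1-C": 36, "W1-D": 33, "W1-E": 20}
order = ["W1-A", "W1-B", "W1-C", "W1-D", "W1-E"]
# Flag any level-to-level drop of more than one third as a cliff.
cliffs = [d for d in level_dropoffs(completions, order) if d[2] > 0.33]
```

Counting distinct player IDs rather than raw failure rows is what turns the logs into a figure like "11 of 17 players failed at least once": a player who fails W1-E five times still counts only once.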

Armed with quantitative confirmation of our qualitative feedback, we redesigned several levels from W1-E onward and launched a new version, which performed thusly:

In the new version, the dropoff between W1-D and W1-E was far less dramatic. Failure data bears this out too: 0 of 6 unique W1-E players failed it in this later trial. Chalk one up to the power of data to make our level design better!

Hint Optimization

Although it wasn’t really the objective of our Beta test, we also tracked how many people used Hints to get past tough levels, and reached an interesting conclusion: almost no one was using them (only 3 unique users had even bothered). This made intuitive sense, as the Hint menu wasn’t an obvious part of the UI by any means.

As a result, in v0.2.3 we added a Hint Tutorial that draws attention to the Hint menu the first time a player retries a level. After that change, Hint usage went up 75%. That simple little tweak has opened our minds to future Hint experiments we want to run in the v0.3 Beta.
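The trigger rule described above (show the tutorial once, on a player's first retry) can be sketched as a tiny piece of state. This is a hypothetical Python illustration of the rule, not Jetsam's actual code. The class and method names are invented, and "retry" is assumed to mean starting the same level again.

```python
from typing import Dict


class HintTutorialTrigger:
    """Decides when to point the player at the Hint menu (hypothetical sketch).

    The tutorial fires on the player's first retry of any level and never again.
    """

    def __init__(self, tutorial_seen: bool = False) -> None:
        # In a real game this flag would be persisted across sessions.
        self.tutorial_seen = tutorial_seen
        self.attempts: Dict[str, int] = {}

    def on_level_start(self, level: str) -> bool:
        """Record an attempt; return True if the Hint tutorial should show now."""
        self.attempts[level] = self.attempts.get(level, 0) + 1
        is_retry = self.attempts[level] > 1
        if is_retry and not self.tutorial_seen:
            self.tutorial_seen = True
            return True
        return False


trigger = HintTutorialTrigger()
trigger.on_level_start("W1-A")  # False: first attempt, no tutorial
trigger.on_level_start("W1-A")  # True: first retry, show the tutorial
```

Tying the prompt to a retry means it appears exactly when a player is most likely to want a Hint, rather than up front when they have no reason to care.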

Device Profiling

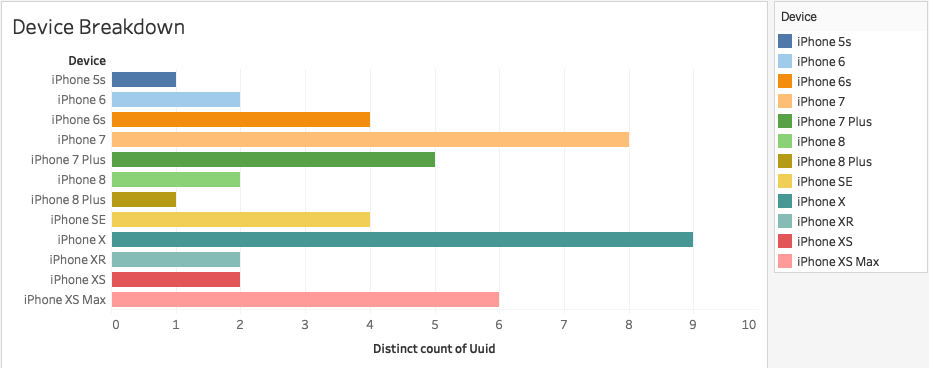

Since we’re launching our game on iOS, we figured we’d capture data about the devices our players were using.

Perhaps unsurprisingly, the iPhone X led the pack – but the iPhone 7 had a strong second-place showing.

Most surprisingly of all, no one played our game on an iPad, even though we have Universal iOS device targeting set up. Maybe that means we should spend less time making our game iPad-friendly?
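A device breakdown like this one can be tallied from the logged rows with a few lines of Python. The sketch below is illustrative only. It assumes each row already carries a readable model name such as "iPhone X", and the function names and sample rows are hypothetical rather than taken from the beta.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def players_per_device(rows: Iterable[Tuple[str, str]]) -> List[Tuple[str, int]]:
    """Rank device models by unique players, from (player_id, device) rows."""
    unique_pairs = set(rows)  # a player counts once per device, however many sessions
    counts = Counter(device for _, device in unique_pairs)
    return counts.most_common()


def ipad_players(rows: Iterable[Tuple[str, str]]) -> int:
    """Count unique players seen on any device whose model name starts with 'iPad'."""
    return len({player for player, device in rows if device.startswith("iPad")})


# Illustrative rows only, not the beta's real device data:
device_rows = [("p1", "iPhone X"), ("p1", "iPhone X"), ("p2", "iPhone X"), ("p3", "iPhone 7")]
print(players_per_device(device_rows))  # [('iPhone X', 2), ('iPhone 7', 1)]
print(ipad_players(device_rows))        # 0
```

Whether to count players or sessions per device is a design choice. This sketch counts players, so one very active tester on a rare device doesn't inflate that device's share.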

Conclusions

Running an open-ish Beta for nearly 50 people was a lot of fun, and it taught us tons about our game’s weak points. Combing through the level completion and failure data helped us smooth out the difficulty curve, which will hopefully lead to stronger player retention. Identifying our Hint issue now will certainly save us monetization headaches down the road. And realizing that iPad players may be scarcer than we assumed helped us better prioritize our development backlog.

Onward to v0.3!

If you liked this blog post, show us some love by following us on Twitter. We’ll happily follow back.

Feel free to download the Jetsam Beta as well if you’re a fan of puzzle games!